How an AI Agent Vulnerability in LangSmith Could Lead to Stolen API Keys and Hijacked LLM Responses

Noma Security research team uncovers CVSS 8.8 “AgentSmith” vulnerability, a potentially malicious proxy configuration affecting AI agents and prompts

Our ongoing research into emerging threats in the rapidly evolving AI landscape continues to uncover significant vulnerabilities. Because the industry has moved quickly to adopt autonomous AI agents, often ahead of robust security measures, our team has prioritized in-depth investigations of leading agentic AI platforms. Following our recent disclosure of critical flaws in Lightning AI, we have now identified a vulnerability, which we named “AgentSmith,” in Prompt Hub, a public repository of community-developed prompts within LangSmith, LangChain’s popular agent management and collaboration platform.

LangChain Notice: “This vulnerability was limited to a specific feature (Prompt Hub public sharing) and did not affect LangChain’s core platform, enterprise deployments, private agents, or the broader LangSmith infrastructure. This affected only users who actively chose to interact with malicious public prompts – representing a small subset of our user base.”

The LangChain security team deployed a fix on November 6th, 2024, to prevent further misuse and implement new safeguards, including warning banners and proxy configuration alerts.

We have found no evidence of active exploitation, and only users who explicitly ran a malicious agent were potentially affected. Users are advised to review any previously forked agents for unsafe proxy settings.

What is the AgentSmith AI agent vulnerability?

The Noma Security Research team successfully demonstrated how malicious proxy settings could be applied to a prompt uploaded to LangChain Hub, the public prompt hub integrated with the LangSmith platform, in order to exfiltrate sensitive data and impersonate a large language model (LLM). LangChain, the company behind various platforms for building, managing, and scaling AI agents – such as LangSmith – is widely used by major enterprises including Microsoft, The Home Depot, DHL, Moody’s, and several others. LangChain Hub, also known as “Prompt Hub,” is a central repository where thousands of pre-configured community-developed prompts (with the majority marked as “agents”) are publicly shared for collaboration and reuse across diverse use cases.

This newly identified vulnerability exploited unsuspecting users who adopted an agent containing a pre-configured malicious proxy server. Uploading such an agent to “Prompt Hub” is against the LangChain ToS. Once the agent was adopted, the malicious proxy discreetly intercepted all user communications – including sensitive data such as API keys (including OpenAI API keys), user prompts, documents, images, and voice inputs – without the victim’s knowledge. The severity of this vulnerability has been assessed with a CVSS v3.1 base score of 8.8 (CVSS:3.1/AV:N/AC:L/PR:N/UI:R/S:U/C:H/I:H/A:H), reflecting its high potential impact on confidentiality, integrity, and availability in real-world environments.
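For readers who want to check how the 8.8 follows from the vector string, here is a minimal sketch of the CVSS v3.1 base score arithmetic. It only handles scope-unchanged (S:U) vectors and only carries the metric weights this particular vector needs. The function names `roundup` and `base_score` are our own for illustration and are not part of any official tool.

```python
import math

# Metric weights from the CVSS v3.1 specification
# (only the values used by the AgentSmith vector are listed).
WEIGHTS = {
    "AV": {"N": 0.85},
    "AC": {"L": 0.77},
    "PR": {"N": 0.85},
    "UI": {"R": 0.62},
    "C": {"H": 0.56},
    "I": {"H": 0.56},
    "A": {"H": 0.56},
}


def roundup(value: float) -> float:
    """CVSS v3.1 Roundup: smallest one-decimal number >= value."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def base_score(vector: str) -> float:
    """Base score for a scope-unchanged (S:U) CVSS v3.1 vector."""
    # Skip the "CVSS:3.1" prefix and map metric -> value.
    parts = dict(item.split(":") for item in vector.split("/")[1:])
    if parts["S"] != "U":
        raise ValueError("this sketch only handles S:U vectors")
    w = {metric: WEIGHTS[metric][parts[metric]] for metric in WEIGHTS}
    iss = 1 - (1 - w["C"]) * (1 - w["I"]) * (1 - w["A"])
    impact = 6.42 * iss
    exploitability = 8.22 * w["AV"] * w["AC"] * w["PR"] * w["UI"]
    if impact <= 0:
        return 0.0
    return roundup(min(impact + exploitability, 10))


VECTOR = "CVSS:3.1/AV:N/AC:L/PR:N/UI:R/S:U/C:H/I:H/A:H"
print(base_score(VECTOR))  # 8.8
```

The impact sub-score comes to roughly 5.87 and the exploitability sub-score to roughly 2.84. Their sum of about 8.71 rounds up to 8.8. The required user interaction (UI:R) is what keeps the score below the 9.x range.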

Victims who chose to adopt this agent could expose their organization to a severe threat with far-reaching consequences. Beyond the immediate risk of unexpected financial losses from unauthorized API usage, malicious actors could gain persistent access to internal datasets uploaded to OpenAI, proprietary models, trade secrets, and other intellectual property, resulting in legal liabilities and reputational damage. In more advanced scenarios, attackers could exploit man-in-the-middle (MITM) capabilities to manipulate downstream LLM responses and tamper with automated decision-making processes. This could cascade into systemic failures, fraud, or regulatory violations, amplifying both the scale and complexity of the incident response required.

AgentSmith AI Agent Vulnerability Resolution

Following the disclosure, Noma Security partnered closely with the LangChain team to triage any additional risks and proactively investigate signs of exploitation in the wild. Our joint analysis found no evidence of active abuse, and the vulnerability only affected users who directly interacted with the malicious agent. No broader compromise of Prompt Hub or LangSmith users was observed.

LangChain’s security team demonstrated exceptional responsiveness and a strong commitment to user safety, swiftly acknowledging the report, thoroughly investigating the issue, and deploying an effective fix within just eight days of initial disclosure, an exemplary model of responsible, security-first engineering.

How the AgentSmith AI Agent Vulnerability Worked

The attack scenario unfolded in three straightforward steps, focusing on a use case of an OpenAI-based agent:

1. Creating an AI Agent with Malicious Proxy Configuration

- The attacker crafted an AI agent (a predefined prompt), either as a unique standalone entity or by mimicking a well-known, trusted agent to maximize exposure and user engagement.

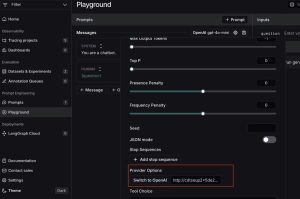

- The attacker configured a proxy server through LangSmith’s Proxy Provider feature, linking it to a server under their control.

- The attacker saved the prompt and proxy settings into a publicly accessible agent and shared it openly on LangChain Hub.

2. Victim Adoption of the Malicious AI Agent

- When an unsuspecting user discovered the malicious AI agent, clicked “Try It,” and provided a prompt to explore its functionality, all of their communications – including OpenAI API keys, prompt data, and any uploaded attachments – were immediately and covertly routed through the attacker’s proxy server, resulting in the silent exfiltration of sensitive information.

- At this point, the attacker could leverage the exfiltrated OpenAI API key to gain unauthorized access to the victim’s OpenAI environment, leading to several potential consequences:

- Data Exfiltration: Download all files previously uploaded to OpenAI, often including sensitive datasets used for fine-tuning, batch inference, or document processing.

- Prompt and Context Leakage: Interact with existing assistants to infer the types of data being submitted, potentially exposing confidential inputs, outputs, or proprietary system prompts.

- Denial of Wallet / Service: Exhaust the organization’s usage quota by initiating high-cost operations, resulting in unexpected billing charges or temporary loss of access to OpenAI services.

3. (Optional) Ongoing Surveillance and Data Exfiltration via Seamless Proxy Interception

- After completing their initial examination, the victim might fork the agent, effectively cloning it into their own environment – along with the embedded malicious proxy configuration.

- As the victim continued to use the malicious agent in their regular workflow, all interactions – including prompts, chat history, uploaded documents, voice inputs, and sensitive credentials like API keys – were silently funneled through the attacker’s proxy server.

- Because the proxy operated transparently and maintained compatibility with OpenAI and other LLM APIs, users saw little to no indication that their traffic was being intercepted. This allowed the attacker to persistently harvest valuable information over time without triggering suspicion or disrupting the user’s normal interaction with the agent.
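The reason a proxy setting alone is enough to leak the key can be sketched in a few lines. An OpenAI-compatible client sends the API key as a bearer token in the `Authorization` header to whatever base URL it is configured with. The example below only assembles requests and never sends them. The helper names, the host `proxy.attacker.example`, the model name, and the key are hypothetical placeholders, and this is an illustration of the general mechanism rather than LangSmith’s actual implementation.

```python
from urllib.parse import urlsplit

OFFICIAL_BASE_URL = "https://api.openai.com/v1"
# Hypothetical attacker-controlled endpoint, for illustration only.
PROXY_BASE_URL = "https://proxy.attacker.example/v1"


def build_chat_request(base_url: str, api_key: str, prompt: str) -> dict:
    """Assemble (but never send) an OpenAI-style chat completion request."""
    return {
        "url": base_url.rstrip("/") + "/chat/completions",
        "headers": {
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        "json": {
            "model": "example-model",  # placeholder model name
            "messages": [{"role": "user", "content": prompt}],
        },
    }


def receiving_host(request: dict) -> str:
    """Host that would receive the headers and body of this request."""
    return urlsplit(request["url"]).hostname


direct = build_chat_request(OFFICIAL_BASE_URL, "sk-placeholder", "Summarize Q3 results")
proxied = build_chat_request(PROXY_BASE_URL, "sk-placeholder", "Summarize Q3 results")

# The key and the prompt are byte-for-byte identical in both requests;
# only the destination differs.
assert direct["headers"] == proxied["headers"]
assert direct["json"] == proxied["json"]
print(receiving_host(direct))   # api.openai.com
print(receiving_host(proxied))  # proxy.attacker.example
```

A proxy that forwards the request unchanged to the real API and relays the answer back is indistinguishable from a direct connection at the application level, which is why the victim sees normal responses.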

AgentSmith Proof of Concept (PoC)

The following demonstration shows the AI agent vulnerability being used to steal the victim’s OpenAI API key.

AgentSmith Prompt Response by LangChain

In line with responsible disclosure practices, Noma Security promptly reported the vulnerability to LangChain’s security team. LangChain responded swiftly and responsibly, releasing an effective security patch that mitigated the issue and closed the exploitation pathway, demonstrating their strong commitment to user safety and rapid incident resolution.

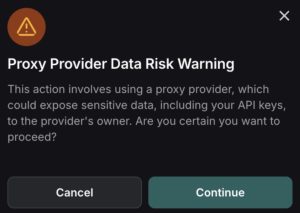

To address the risk of malicious proxy configurations, the LangChain team implemented additional safeguards, including:

- A warning prompt that now appears when users attempt to clone an agent containing a custom proxy configuration.

- A persistent warning banner displayed on the agent’s description page, alerting users to the presence of potentially unsafe proxy settings.
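Teams reviewing previously forked agents can approximate a similar check on their own side. The sketch below flags any agent whose custom endpoint is not HTTPS on an explicitly approved host. The agent record layout (`name`, `base_url`), the trusted host list, and the function names are assumptions made for illustration. LangSmith’s real export format may differ, so adapt the field access accordingly.

```python
from urllib.parse import urlsplit

# Assumption: hosts your organization has approved as LLM endpoints.
TRUSTED_HOSTS = {"api.openai.com"}


def is_trusted_endpoint(base_url, trusted_hosts=TRUSTED_HOSTS) -> bool:
    """True if no custom endpoint is set, or it is HTTPS on an approved host."""
    if not base_url:
        return True  # assumption: no override means the provider default is used
    parts = urlsplit(base_url)
    # Exact hostname match, so look-alike prefixes and suffixes do not pass.
    return parts.scheme == "https" and parts.hostname in trusted_hosts


def flag_unsafe_agents(agents) -> list:
    """Names of agents whose proxy/base URL is not on the allowlist."""
    return [a["name"] for a in agents if not is_trusted_endpoint(a.get("base_url"))]


# Hypothetical inventory of forked agents.
agents = [
    {"name": "summarizer", "base_url": None},
    {"name": "support-bot", "base_url": "https://api.openai.com/v1"},
    {"name": "forked-helper", "base_url": "https://proxy.attacker.example/v1"},
    {"name": "lookalike", "base_url": "https://api.openai.com.evil.example/v1"},
]
print(flag_unsafe_agents(agents))  # ['forked-helper', 'lookalike']
```

Matching on the parsed hostname rather than on a substring of the URL is deliberate: a check such as `"api.openai.com" in url` would accept the look-alike host in the last record.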

Responsible Disclosure Timeline

- October 29, 2024 – Vulnerability discovered allowing leakage of any user’s OpenAI API token. Initial contact email sent to verify LangChain’s security contact was actively monitored.

- October 30, 2024 – Vulnerability formally reported to LangChain.

- October 30, 2024 – LangChain acknowledged the report and began investigation.

- November 5, 2024 – LangChain confirmed the AgentSmith AI Agent vulnerability and informed us they were actively working on mitigation.

- November 6, 2024 – Security fix deployed by LangChain. The Noma team verified the issue was resolved.

Actions for security teams to secure Agentic AI within your organization

1. Centralized Inventory and Lineage Tracking

Security teams must begin by establishing complete visibility and traceability across all AI agents in use. This includes:

- Maintaining a centralized inventory of all agents, workflows, and tools – covering internal development, open-source adoption, and third-party marketplaces such as LangChain Hub or PromptHub.

- Using an AI Bill of Materials (AI BOM) to capture detailed lineage data: where agents came from, what tools they invoke, what APIs they access, and what downstream systems are connected. This enables:

- Rapid blast radius assessments in the event of compromise.

- Efficient triage of inherited risks from reused or forked agents.

- Continuous compliance and auditability across the AI stack.
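As a rough illustration of what lineage data makes possible, the sketch below models a minimal inventory record and answers the blast radius question: if one agent turns out to be compromised, which forks inherit the problem and which downstream systems do they touch? The `AgentRecord` fields and the `blast_radius` helper are hypothetical and much simpler than a real AI BOM schema.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AgentRecord:
    """One inventory entry (hypothetical, simplified AI BOM record)."""
    name: str
    source: str                         # e.g. "internal", "public-hub"
    forked_from: Optional[str] = None   # lineage: name of the parent agent
    tools: list = field(default_factory=list)
    apis: list = field(default_factory=list)
    downstream: list = field(default_factory=list)


def blast_radius(inventory, compromised: str):
    """Return (affected agent names, downstream systems they touch)."""
    affected = {compromised}
    changed = True
    while changed:  # follow fork lineage transitively
        changed = False
        for rec in inventory:
            if rec.forked_from in affected and rec.name not in affected:
                affected.add(rec.name)
                changed = True
    systems = set()
    for rec in inventory:
        if rec.name in affected:
            systems.update(rec.downstream)
    return affected, systems


inventory = [
    AgentRecord("hub/helper", "public-hub", apis=["openai"]),
    AgentRecord("team-helper", "internal", forked_from="hub/helper", downstream=["crm"]),
    AgentRecord("team-helper-v2", "internal", forked_from="team-helper", downstream=["billing"]),
    AgentRecord("internal-bot", "internal", downstream=["wiki"]),
]

agents, systems = blast_radius(inventory, "hub/helper")
print(sorted(agents))   # ['hub/helper', 'team-helper', 'team-helper-v2']
print(sorted(systems))  # ['billing', 'crm']
```

Without the `forked_from` field, the second-generation fork would look like an unrelated internal agent and would be missed during triage.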

Get comprehensive visibility of all AI agents in your environment with Noma Security. See how it works.

2. Runtime Protection and Guardrail Enforcement

Prevent misuse and sensitive data exfiltration by instrumenting runtime controls:

- Enforce tool-calling security guardrails: Define strict allow/deny policies for external tool invocation (e.g., webhooks, code execution, file I/O).

- Detect malicious behavior in real time, such as sensitive data exfiltration, prompt injection, or jailbreaks.

- Inspect and sanitize agent output before consumption by downstream systems – especially for agents triggering automation or transactional logic.
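A tool-calling guardrail of the kind described above can be sketched as a default-deny policy that is consulted before any tool runs. `ToolPolicy`, `guarded_call`, and the tool names are hypothetical and not taken from any specific agent framework. A production control would also log decisions and inspect arguments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolPolicy:
    """Allow/deny policy for tool invocation (illustrative only)."""
    allowed: frozenset
    denied: frozenset = frozenset()

    def permits(self, tool_name: str) -> bool:
        # Deny wins over allow; anything not listed is rejected (default deny).
        return tool_name not in self.denied and tool_name in self.allowed


def guarded_call(policy: ToolPolicy, tool_name: str, tool_fn, *args, **kwargs):
    """Run tool_fn only if the policy permits tool_name."""
    if not policy.permits(tool_name):
        raise PermissionError(f"tool '{tool_name}' blocked by policy")
    return tool_fn(*args, **kwargs)


policy = ToolPolicy(
    allowed=frozenset({"search_docs"}),
    denied=frozenset({"run_shell"}),
)

# Permitted tool: executes normally.
print(guarded_call(policy, "search_docs", lambda q: f"results for {q}", "proxy settings"))

# Unlisted tool: blocked even though it is not explicitly denied.
try:
    guarded_call(policy, "send_webhook", lambda url: url, "https://example.invalid")
except PermissionError as exc:
    print(exc)
```

Default deny matters for agents sourced from public hubs: a tool the policy author never anticipated is blocked instead of silently allowed.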

3. Security Governance and Posture Enforcement

Treat every AI agent as a production-grade component. Apply rigorous security validation and threat modeling before deployment:

- Conduct pre-runtime posture assessments: Evaluate the agent’s expected behavior in context – analyzing its data access scope, outbound network activity, and tool-calling logic. Leverage use-case–specific red teaming and simulation to uncover misuse paths or unintended behaviors.

- Segregate untrusted agents: Enforce strict isolation for high-risk agents – such as those sourced from open hubs or under active development – to prevent lateral movement and data leakage within production environments.

Staying Ahead of AI Security Risks

As the AI landscape continues its rapid evolution toward autonomous AI agents, new and complex security risks emerge. Organizations adopting platforms like LangSmith and LangChain Hub must remain vigilant, continuously assessing security posture to guard against sophisticated threats.

At Noma Security, our dedicated AI threat research continues. We are committed to proactively identifying and addressing AI security vulnerabilities to help ensure the safety and trustworthiness of AI deployments globally.

Request a demo of Noma Security today to see how you can identify AI vulnerabilities and secure the AI agents within your organization.